Government

Healthcare Security & Privacy Challenges of ChatGPT, AI Tools

Recent advances in Generative AI Large Language Models, such as ChatGPT, have been making waves across various industries, not least in healthcare. With…

Recent advances in Generative AI Large Language Models, such as ChatGPT, have been making waves across various industries, not least in healthcare. With the ability to converse with users much like a friend, adviser, or assistant, these models have a broad appeal and immense potential. Their user-friendly nature is democratizing access to AI and stirring a cauldron of innovation, with healthcare emerging as a field ripe for exploration.

Nevertheless, as with any powerful tool, there’s a double-edged sword at play here. The very attributes that make these tools valuable—autonomy, adaptability, and scale—can also be exploited for malevolent ends. While we revel in the promise of transformative applications, growing apprehensions regarding misuse and abuse loom in the background.

As we stride into this brave new world of AI-enabled healthcare, the challenge before us is not just about harnessing the power of these solutions. It’s also about developing safeguards that allow us to tap into their value while mitigating risks associated with their use. Let’s delve into this exciting yet complex landscape, examining how to maximize benefits and minimize potential pitfalls.

Understanding Generative AI Large Language Models

Generative AI Large Language Models like ChatGPT utilize advanced machine learning to generate text resembling human communication. Trained on extensive datasets consisting of billions of sentences using “transformer neural networks,” these models excel at predicting the next sequence in a text string, akin to an ultra-advanced auto-complete.

This goes well beyond simply reproducing learned data, instead synthesizing patterns, themes, and structures to produce novel outputs. This impressive capability expands the scope for diverse applications, from patient interaction to medical literature review, heralding an exciting age of AI-assisted healthcare.

Applications in Healthcare

AI models are transforming healthcare with an increasing range of applications. They are used in medical triage and patient engagement, where AI chatbots guide patients based on their symptoms, enhancing healthcare accessibility. AI models also assist physicians by providing evidence-based recommendations for clinical decisions. Companies like eClinicalWorks are integrating AI into their systems to reduce administrative tasks. Additionally, AI models have ventured into mental health support, offering therapeutic interactions. Future prospects are extensive, from personalized patient education and routine task automation to aiding in pharmaceutical research. As innovation progresses, the potential applications of AI in healthcare seem boundless.

The Benefits of AI-Language Models in Healthcare

One of the main benefits of AI language models in healthcare is their ability to enhance efficiency and accessibility. For example, AI triage and patient engagement tools can provide round-the-clock service, reducing wait times and allowing patients in remote or underserved areas to access essential healthcare advice.

Moreover, these models democratize health information, offering clear, understandable insights to patients and promoting more informed decision-making. This is crucial in a field where comprehension gaps often impede patient engagement and treatment adherence. Another key advantage is the personalization of care. AI models can tailor their responses to individuals, potentially improving the relevance and effectiveness of health advice, educational materials, and therapeutic interactions.

Additionally, AI language models could augment the accuracy and consistency of medical decisions. They can synthesize vast amounts of research, past patient data, and guidelines in real-time, offering clinicians decision support based on the latest evidence. When harnessed properly, these benefits could revolutionize patient experiences, clinical decision-making, and healthcare administration, driving a new era of efficient, personalized, and data-driven care.

The Dark Side: Potential Misuse and Ethical Considerations

While AI language models promise transformative benefits in healthcare, potential misuses and ethical challenges loom. One concern is the propagation of misinformation if models generate outdated or incorrect health information, posing a substantial risk in a field where accurate information is crucial.

The potential for AI tools to be exploited for malicious ends, such as improving phishing attacks or generating sophisticated malware, presents considerable cybersecurity threats. Privacy issues also arise as AI models, trained on extensive datasets, might unintentionally leak sensitive information. Bias in training data can lead to unfair outputs, highlighting the ethical issue of accountability in AI decision-making. Furthermore, the “black box” nature of AI complicates transparency and trust, critical factors for widespread adoption in healthcare. Lastly, increased technological dependence risks eroding human skill in identifying technological faults.

Addressing these concerns is essential to leverage AI’s benefits in healthcare without compromising safety, privacy, and ethical standards. As we delve deeper into the world of AI, striking this balance becomes an ever-evolving challenge.

Risks of Attacks on the AI Solutions Themselves

AI language models aren’t just tools; they’re also potential targets. Data or AI poisoning attacks can corrupt AI responses by injecting misleading information into the training sets. Prompt injection attacks present another risk, potentially revealing proprietary business information about the AI deployment. For instance, a recent experiment by a Stanford student prompted Bing’s AI model to disclose its initial instructions, typically hidden from users. Indirect prompt injection attacks are also emerging, where third-party attackers manipulate the prompt, opening the door to data theft, information ecosystem contamination, and more.

Even ChatGPT, a leading AI model, recently fell victim to a breach. Credentials of over 100,000 users were stolen and appeared for sale on the Dark Web, potentially exposing all information these unlucky users submitted to ChatGPT. These incidents highlight that as we advance in AI technology, security measures must concurrently evolve, ensuring the protection of both the tools and their users from the growing complexity of cyber threats.

Balancing the Benefits and Risks

Navigating AI-enabled healthcare requires a delicate equilibrium between embracing benefits and countering risks. Key to this balance is the formulation and enforcement of comprehensive regulations, demanding close collaboration between regulators, technology developers, healthcare providers, and ethicists.

Adopting ethical AI frameworks, like those proposed by global health organizations, can guide our way, focusing on transparency, fairness, human oversight, privacy, and accountability. Unfortunately, creating regulations and ethical frameworks is particularly difficult when we do not fully understand the implications of these AI technologies. Too many restrictions will stifle technological advancement, and too little may have catastrophic, life-threatening implications.

Regular audits of AI systems are equally critical, facilitating early detection of misuse or unethical practices and guiding necessary system updates. Further, cultivating a culture of responsibility among all stakeholders, from developers and healthcare providers to end-users, is essential for ensuring ethical, effective, and safe AI applications in healthcare. We can harness AI’s transformative potential in healthcare through concerted efforts and stringent checks while diligently minimizing associated risks.

The Future of AI in Healthcare

AI’s future in healthcare promises transformative potential, with AI roles expanding into predictive analytics, drug discovery, robotic surgery, and home care. Trends indicate a rising use of AI in personalized medicine, where large datasets enable more personalized, predictive, and preventive care. This could shift treatment strategies from disease response to health maintenance. AI will also be crucial in managing burgeoning healthcare data, efficiently analyzing vast quantities to inform decision-making and enhance patient outcomes. However, the success of AI in healthcare hinges on health IT professionals, who bridge technology and care, ensuring effective system implementation, ethical usage, and continuous improvement. Their pivotal role will shape a future where AI and healthcare merge, delivering superior care for all.

Summing Up

AI-enabled healthcare presents an exciting journey with tremendous potential and notable challenges. AI language models can revolutionize healthcare, yet, potential misuse, privacy, and ethical concerns necessitate careful navigation. Balancing these aspects requires robust regulations, ethical frameworks, and diligent monitoring. Yet, this, too, has its risks. Health IT professionals’ roles are vital in shaping this future. Our stewardship of this technology is crucial as we launch a new era where AI and humans collaboratively enhance healthcare. We hold the key to optimizing AI’s potential while minimizing risks. The future of healthcare, powered by AI, is in our hands and promises to be extraordinary.

About Jon Moore

Jon Moore, MS, JD, HCISPP, Chief Risk Officer and SVP at Clearwater, a company combining deep healthcare, cybersecurity, and compliance expertise with comprehensive service and technology solutions to help organizations become more secure, compliant, and resilient. Moore is an experienced professional with a background in privacy and security law, technology, and healthcare. During an 8-year tenure with PricewaterhouseCoopers (PwC), Moore served in multiple roles. He was a leader of the Federal Healthcare Practice, Federal Practice IT Operational Leader, and a member of the Federal Practice’s Operational Leadership Team.

Among the significant federal clients supported by Moore and his engagements are: The National Institute of Standards and Technology (NIST), the National Institutes of Health (NIH), the Indian Health Service (IHS), the Department of Health and Human Services (HHS), U.S. Nuclear Regulatory Commission (NRC), Environmental Protection Agency (EPA), and Administration for Children and Families (ACF). Moore holds a BA in Economics from Haverford College, a law degree from Penn State University’s Dickinson Law, and an MS in Electronic Commerce from Carnegie Mellon’s School of Computer Science and Tepper School of Business.

ai

health it

machine learning

personalized medicine

healthcare

medicine

medical

pharmaceutical

research

department of health

Here Are the Champions! Our Top Performing Stories in 2023

It has been quite a year – not just for the psychedelic industry, but also for humanity as a whole. Volatile might not be the most elegant word for it,…

AI can already diagnose depression better than a doctor and tell you which treatment is best

Artificial intelligence (AI) shows great promise in revolutionizing the diagnosis and treatment of depression, offering more accurate diagnoses and predicting…

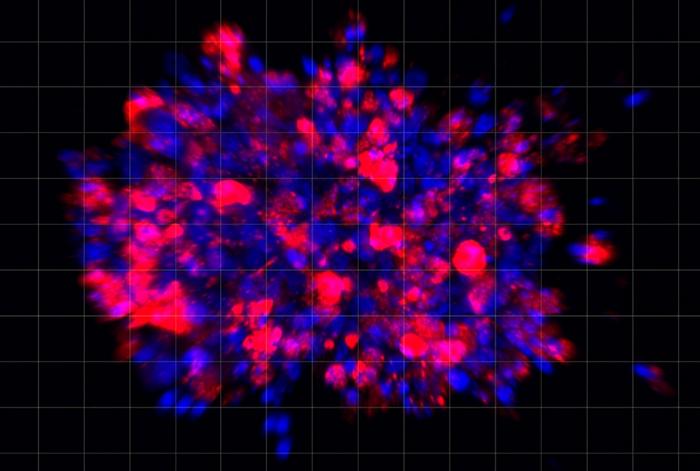

Scientists use organoid model to identify potential new pancreatic cancer treatment

A drug screening system that models cancers using lab-grown tissues called organoids has helped uncover a promising target for future pancreatic cancer…