This Website Wants to Use AI to Make Models Obsolete

The site is a reminder of the limitations of AI: AI-generated images are stiff, easy to spot, and biased in many ways.

A developer from the Netherlands has created an AI photo studio and modeling agency called Deep Agency. For $29 a month, you can get high-quality photos of yourself against a number of backgrounds, as well as generate images of AI models based on a given prompt. “Hire virtual models and create a virtual twin with an avatar that looks just like you. Elevate your photo game and say goodbye to traditional photo shoots,” the site reads.

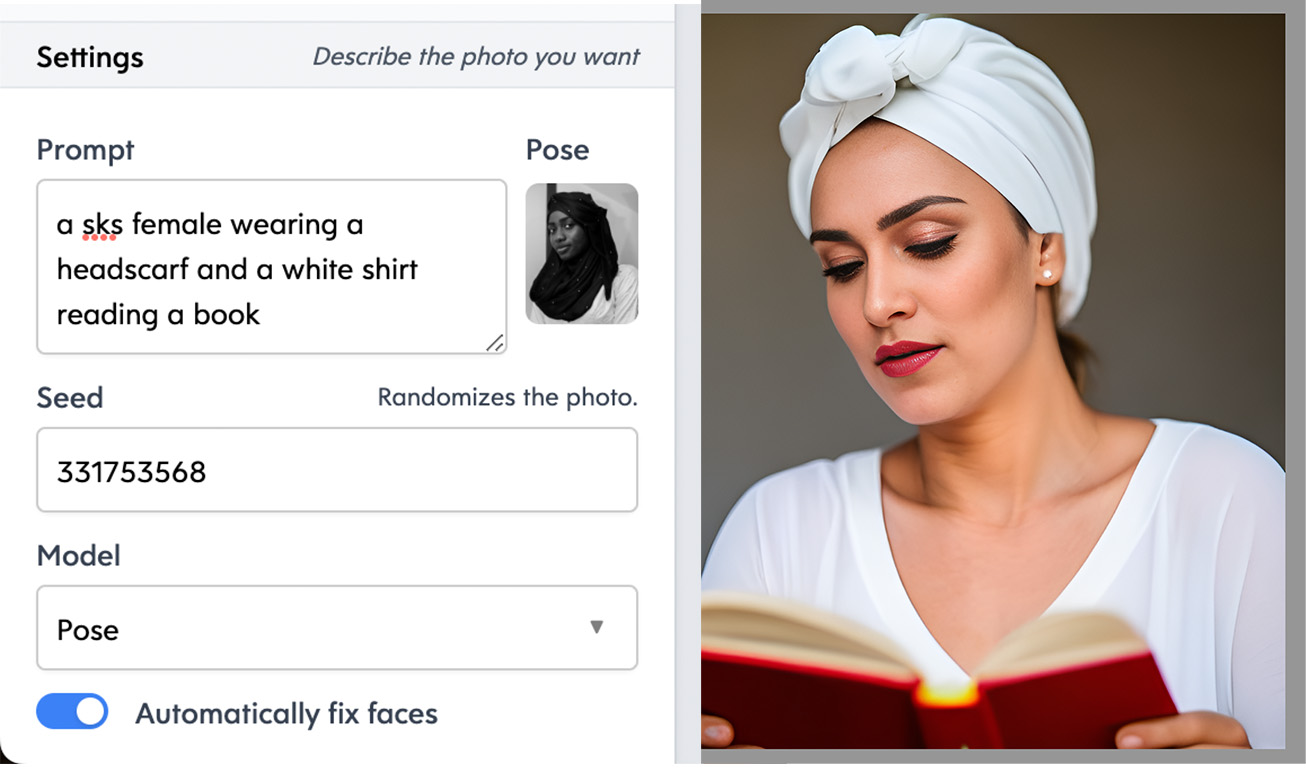

According to the creator, Danny Postma, the platform uses the latest text-to-image AI models, which would mean a model like DALL-E 2, and is accessible everywhere in the world. On the platform, you can customize your photo by choosing the model’s pose and writing different descriptions of what you want them to do.

Rather than rendering models, photographers, and creatives obsolete, the site does quite the opposite. Postma says on Twitter that the site is “in open beta” and that “things will break,” and in use it does feel almost silly, like a glorified version of DALL-E 2 that is only allowed to generate female models. The site, then, reminds us of the limitations of AI: AI-generated images are stiff and easy to spot, and they are also biased in many ways.

So far, the prompt must include “sks female” for the model to work, meaning the site only generates images of women unless you purchase a paid subscription, which unlocks three other models, one woman and two men, and allows you to upload your own images to create an “AI twin.”

Are you a model worried about AI replacing you? Are you using AI as part of your work now? We’d love to hear from you. Using a non-work phone or computer, you can contact Chloe Xiang by email at chloe.xiang@vice.com

To generate an image, you type a prompt, choose a pose from the site’s existing catalog of images, and pick from a number of settings including “time & weather”, “camera”, “lens & aperture”, “shutterspeed”, and “lighting.” None of those settings appears to have any effect yet, as most generated images come out as the same brightly lit female portrait in front of a very blurred background.

When the prompt says only “sks female,” the site automatically generates an image of a blonde white woman, even if you selected an image of a woman of a different race or likeness in the catalog. You have to add words specifying race, age, and other demographic characteristics if you want to change the model’s look. For example, when Motherboard selected one of the site’s pre-existing images of a person of color wearing a religious headscarf, along with its corresponding prompt, to generate an image based on it, the result was a white woman wearing a fashion headscarf.

OpenAI’s DALL-E 2 text-to-image generator has already been shown to have baked-in biases. For example, when prompted to produce an image of “a flight attendant,” the generator produces only images of women, and when it’s asked to produce an image of a CEO, it mostly displays images of white men. Though examples like these regularly appear, it has been hard for OpenAI to pinpoint the exact origins of the biases and fix them, even though the company has said that it is working on improving its system. A photo studio built on a biased model will inevitably carry the same issues.

This AI model generator comes at a time when the modeling industry has already been under great pressure to diversify its models. What was once an exclusive industry with a single body and image standard has, after immense public backlash, become more open to everyday models, including people cast from the street and from platforms like Instagram and TikTok. Though representation still has a long way to go in the world of high fashion, people have taken to creating their own style-inclusive content on social media, and have shown that audiences prefer the more personable, casual “model” in the form of influencers.

Simon Chambers, director at modeling agency Storm Management, told Motherboard in an email that “AI avatars could also be used instead of models but the caveat here is that compelling imagery needs creativity & emotion, so our take, in the near future, is that AI created talent would work best on basic imagery used for simple reference purposes, rather than for marketing or promoting where a relationship with the customer needs to be established.”

“That said, avatars also represent an opportunity as well-known talent will, at some point, be likely to have their own digital twins which operate in the metaverse or different metaverses. An agency such as Storm would expect to manage the commercial activities of both the real talent and their avatar. This is being actively discussed but at present it feels like the metaverse sphere needs to develop further before it delivers true value to users and brands and becomes a widespread phenomenon,” he added. Chambers also said the use of such avatars has implications under the GDPR, the European Union’s data protection law.

It’s hard to say what Deep Agency’s AI-generated models will be used for, considering you can’t generate models that wear specific logos or hold branded products. When Motherboard tried getting the platform to generate an image of a woman eating a hotdog, the hotdog instead appeared on the woman’s head and she had her finger to her lips, looking pensive.

The idea of an AI model has been in the works for years now. Model Sinead Bovell wrote a piece in Vogue in 2020 saying that she thinks artificial intelligence will soon take over her job. She was referring to the emergence of CGI models, rather than AI-generated ones, such as Miquela Sousa, who is known as Lil Miquela on Instagram and has nearly 3 million followers. Miquela has her own character narrative and has partnered with brands such as Prada and Samsung. Bovell said the next step after CGI models is AI models that can walk, talk, and act, referencing a company called DataGrid, which made a number of models using generative AI in 2019.

Deep Agency’s images, on the other hand, are much less three-dimensional and bring us back to the question of privacy in AI images. Deep Agency says in its Terms and Conditions that it uses an AI system trained on public datasets. It is likely, then, that these images resemble the likenesses of real women in existing photographs. Motherboard reported that the LAION-5B dataset, an open-source dataset used to train systems like DALL-E and Stable Diffusion, included many images of real people, from headshots to medical images, used without permission.

Lensa A.I., an app that went viral in December by letting people generate images of themselves on different backgrounds using AI, has since come under fire over a number of privacy and copyright concerns. Many artists pointed to the LAION-5B dataset, where they found their art had been included without their knowledge or permission, and said that the app, which uses a model trained on LAION-5B, was therefore violating their copyright. People said that images generated by the app featured mangled versions of artists’ signatures, and they questioned the app’s claims that the images were made from scratch.

Deep Agency already seems to be facing a similar problem, with jumbled white text appearing at the bottom right corner of many of the images Motherboard has generated. The site says that people can use the generated photos anywhere and for anything, which seems to be part of its value proposition of being an affordable way to create realistic images when many photography websites such as Getty charge hundreds of dollars to use a single photo.

OpenAI CEO Sam Altman has warned many times about the need to consider carefully what AI is used for. Last month, Altman tweeted that “although current-generation AI tools aren’t very scary, i think we are potentially not that far away from potentially scary ones. having time to understand what’s happening, how people want to use these tools, and how society can co-evolve is critical.” In this case, it’s interesting to see how an AI tool actually takes us backward, toward a less inclusive cast of models.

Chambers, of Storm, said, “AI will speed up and streamline many business processes and will find its place in our industry but we bet that, for the time being, real people will continue to lead and inspire!”

Danny Postma, the creator of Deep Agency, did not respond to Motherboard’s request for comment.

Joseph Cox contributed reporting.
